Suppose you’re given some clear question with a well-defined answer. Computer scientists often like to consider number-theoretic questions, such as “Does 19 divide 1547 evenly?”

It seems quite natural to try to assign to this problem some sort of measure of difficulty. For example, from an intuitive perspective, it’s easier to figure out whether 19 divides 77 than whether it divides 1547. And, figuring out whether two divides 1548 is totally trivial. And, of course, there are much harder problems, like counting all the prime numbers below 1548.

Some problems’ difficulties take a second to figure out. For example, is it harder to figure out what the square root of 15,129 is or to count the squares less than 15,130? Intuitively, it might seem like the second one should be harder, but really, the answer to the second question is the same as the answer to the first–15,129 is 123^2, and there are 123 squares less than 15,130 (not counting zero), one for each positive integer less than or equal to 123. So the first problem is exactly as difficult as the second.
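As a quick check of that arithmetic, here is a minimal Python sketch. The helper name `count_squares_below` is made up for illustration; `math.isqrt` is the standard library's integer square root (Python 3.8 and later).

```python
import math

def count_squares_below(limit):
    """Count the positive perfect squares strictly less than limit (limit >= 1)."""
    # The squares below limit are 1^2, 2^2, ..., r^2, where r = isqrt(limit - 1).
    return math.isqrt(limit - 1)

root = math.isqrt(15129)
print(root, root * root == 15129)    # the integer square root, and whether it is exact
print(count_squares_below(15130))    # the number of positive squares below 15,130
```

Both questions come down to a single integer square root, which is why neither one is harder than the other.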

So, some problems’ difficulties are intuitively comparable. Some are still clearly comparable, but realizing that isn’t completely trivial. But, some problems just seem terribly unrelated. For example, is it easier to count the primes less than 78 or to tell whether 1547 is prime? Is it easier to count the squares less than 123,456 or count the primes less than 78? For questions like these, it seems likely that the answers depend on our definition of difficulty.

So, we need a rigorous definition of difficulty. The field that studies such definitions and categorizes problems according to their difficulty is called complexity theory, and it’s one of my favorite branches of mathematics. What follows is an NSD-style introduction to the field:


A Me-Based Notion of Difficulty

Intuitively, if a problem is really easy, then I can just tell you the answer immediately. For example, it takes any respectable nerd no time at all to recognize that 77 is seven times eleven, so it’s pretty damn easy to figure out that 19 doesn’t divide 77 evenly. Harder problems take longer to solve. Therefore, we might measure difficulty in time–A problem’s difficulty is defined as how long it takes a particular person to answer it. Since I’m a person, we’ll use me.

As it happens, I’m much faster at figuring out whether 19 divides 1547 (It doesn’t: 1547 = 7 * 13 * 17) than I am at counting the primes less than 78 (There are 21). So, I’m pretty convinced that the first problem is much, much easier.

But, this obviously isn’t a very nice definition. Different people take different amounts of time to solve the same problem. For example, it happens that I think about small prime numbers a lot (I really am very nerdy), and I’m a bit slow for a nerd when it comes to multiplying three-digit numbers together. So, I’m much faster at counting all the primes less than 78 than I am at taking the square root of, say, 15,129. I’m not really sure if that’s true for everyone, though.

Even if we all just agree that I’m the official arbiter of difficulty (Donations to support my re-election campaign can be sent to…), we still run into the problem that it doesn’t always take me the same amount of time to solve every problem. If, for whatever reason, I get tripped up when trying to figure out if 67 is prime or if I lose count midway, then I might end up solving the square root problem faster than I count the small primes. Little variations like this can easily change the time that it takes me to solve a problem by a factor of ten in practice. So, our measure is pretty damn inexact.

Plus, humans aren’t the best at answering questions. How, for example, do we compare two questions that I can’t even answer without help, like whether 22,801,763,489 is prime (It’s actually the billionth prime) or how many primes there are below 100,000 (There are 9,592)? Should we just label both of these problems impossible to solve? What do we do if I give the wrong answer to a problem?

There are many more hurdles too, but I’d rather introduce them later.

So, this simply isn’t a well-defined notion of difficulty.

From Me to My Laptop

Since I’m a soon-to-be computer scientist, my next attempt at a definition of difficulty naturally involves computers: A problem’s difficulty is defined as the time that it takes my laptop to solve it. (If we’re worried that my laptop’s speed might vary–and it does to an infuriating degree–we could imagine replacing my laptop with a formal mathematical model of a computer.)

So, let’s write some simple computer programs that solve two problems and see how long it takes them to run on my laptop. I’ll pick the problem of figuring out whether 1547 is divisible by 19 and the problem of counting the primes less than 78. I’ll write them in English-style pseudocode so that laymen can read them, and I’ll make them extremely simple (and therefore sort of stupid–There are very simple optimizations possible here, and there are also much fancier and faster ways to test for primality that would completely change the timing.):

Find the remainder when you try to divide 1547 by 19. If it's 0, return true. Otherwise, return false.

Set count = 1.
For every integer n between 3 and 78, do this:
    For every integer m between 2 and (n-1), do this:
        If m divides n, exit the loop. (Check divisibility as in the previous program.)
    If you reached the end of the loop, increment count.
Output count.

(Nerds in the audience, you might want to pause for a sec and guess how much longer the second program will take. You should be able to guess to within 50% or so.)

Clearly, these programs solve the problems. I ran versions of these programs (in Python) on my computer, and I discovered that it takes my computer about 0.00000053 seconds to determine that 1547 is in fact not divisible by 19 using the above method. It takes it 0.00033 seconds to count the 21 primes that are less than 78. So, the second program is about 622 times slower than the first. Intuitively, this seems reasonable enough. (Indeed, the second program does 760 remainder operations, so it makes sense that it’s roughly 700 times harder than one remainder operation.)
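The count of remainder operations can be checked directly. What follows is a minimal sketch of the prime-counting pseudocode with a counter added, not the exact code behind the timings above; the function name and the `ops` counter are assumptions for illustration.

```python
def count_primes_counting_ops(upper):
    """Count the primes up to `upper` by naive trial division.

    Returns (prime_count, remainder_ops), where remainder_ops is the
    number of times the remainder operation was performed.
    """
    count = 1   # take for granted that 2 is prime, as in the pseudocode
    ops = 0
    for n in range(3, upper + 1):
        for m in range(2, n):
            ops += 1
            if n % m == 0:
                break        # found a divisor, so n is not prime
        else:
            count += 1       # the loop ran to the end, so n is prime
    return count, ops

primes, ops = count_primes_counting_ops(78)
print(primes, ops)
```

Run on the range 3 to 78, this reports 21 primes and 760 remainder operations, matching the figures above.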

(It’s probably worth mentioning that Python is typically an extremely slow language. I’m not very knowledgeable about these things, but I think it’s safe to assume that the same programs would have run significantly faster in C. But, Python was more convenient for me, and we’re only interested in relative speed anyway. I used Python’s awesome library, timeit.)
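For readers who want to try this at home, here is a rough sketch of how such a measurement might be set up with `timeit`. The function names and the repetition count are assumptions, and the absolute numbers will differ from machine to machine; only the ratio is of interest.

```python
import timeit

def divides_1547_by_19():
    """The first program: is 1547 divisible by 19?"""
    return 1547 % 19 == 0

def count_primes_below_78():
    """The second program: naive trial division, starting the count at 1 for the prime 2."""
    count = 1
    for n in range(3, 79):
        for m in range(2, n):
            if n % m == 0:
                break
        else:
            count += 1
    return count

REPS = 1000   # arbitrary; more repetitions smooth out the noise
t_divide = timeit.timeit(divides_1547_by_19, number=REPS) / REPS
t_count = timeit.timeit(count_primes_below_78, number=REPS) / REPS
print("divisibility check: %.3g seconds per call" % t_divide)
print("prime count:        %.3g seconds per call" % t_count)
print("ratio:              %.0f" % (t_count / t_divide))
```

`timeit.timeit` accepts a callable and returns the total time for `number` calls, so dividing by `REPS` gives a per-call average. Part of that average is function-call overhead, which matters a lot for something as cheap as one remainder operation.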

So, on its face, this seems like a pretty sweet way to gauge the difficulty of a problem. There’s only one problem: I write stupid programs. There are much faster programs for counting primes, and any formal measure that depends heavily on my programming ability probably isn’t a great idea.

So, the obvious solution would be to define a problem’s difficulty in terms of the time that it takes the fastest program possible to solve it on my laptop. Finding the fastest program might be a bit of a chore, but that seems like a small price to pay for a canonical measure of difficulty. Unfortunately, this doesn’t work because you can cheat:

Cheating

You might notice that I didn’t bother to check if two was prime in my second program. Instead, I just took for granted that two is prime, starting at three and initializing my counter at one instead of zero. I did this because the line “For every integer m between 2 and (n-1)” doesn’t behave correctly when n is two. This was obviously a trivial change, but it does speed up my program by a tiny bit. So, this gives us a way to try to write a faster program: Why not start at four instead of three? And why not five? And then why not 42?

If we take this idea to its extreme, we end up just not bothering to test any numbers for primality at all. Indeed, here’s a program that solves the problem perfectly:

Output 21

That program runs in about 0.0000005 seconds on my computer (in Python, using a function with a return statement), about 700 times faster than the first program that I wrote to solve the same problem. (Interestingly, on my other laptop, this program is twice as fast, even though the other programs are all about the same. I have no idea why.)

That might seem like cheating, but it’s clearly within the rules–This program answers the question. And, of course, this strategy is not limited to that specific problem. If we want a computer program that solves one specific problem with one unique answer, then we can always just write a computer program that has exactly one step: Outputting the correct answer. All of these programs will run in more-or-less the same amount of time, so this makes our definition of difficulty completely useless!

This is a big hurdle. Any question with a unique answer can be “solved” with absolutely no understanding of the problem just by announcing the answer, and any computer program that happens to output the correct answer, no matter how unrelated the program is to the actual question, is a “solution.” This horribly violates the intuitive notion that harder problems take more work to solve.

We could try to get around this problem by requiring that the computer program that we use to solve the problem must be “simple” or “natural” or “do real work”, but who’s to say what qualifies as such? Is it natural to leave out two? Are extremely complex algorithms developed by computer scientists like the AKS primality test or the general number field sieve natural? What if I write some complicated computer program that no human being can comprehend (Doing so is actually really easy), and it happens to output the correct answer to some very difficult problem very quickly? Who decides if that program “does real work” if we can’t even understand it?

If we’re going to use a subjective term to define what programs count, then we might as well return to our intuitive notion of difficulty–or to our me-based approach.

From 1547 to n

Fortunately, there is a solution to this problem, though it might seem a bit messy at first.

The idea is that cheating is only possible on a finite set: I can trivially write a computer program that very quickly outputs that 1547 isn’t prime without doing any “real work”, or that stores a long list of all primes below a certain number and quickly tells me whether or not a number in the relevant range is prime, simply by checking if it’s in the list.

But, if I want to write a computer program that will tell me if any number that some user inputs is prime, then I can’t possibly cheat and maintain a list of all prime numbers. Any list will necessarily have a highest value, and above that, if I want to tell the user whether his input is prime or not, I have to do “real work.”

Here’s a program that does real work–It’s just a generalization of the earlier program to checking the divisibility by 19 of arbitrary numbers instead of just 1547.

Set n to the user's input. Find the remainder when you try to divide n by 19. If it's 0, return true. Otherwise, return false.

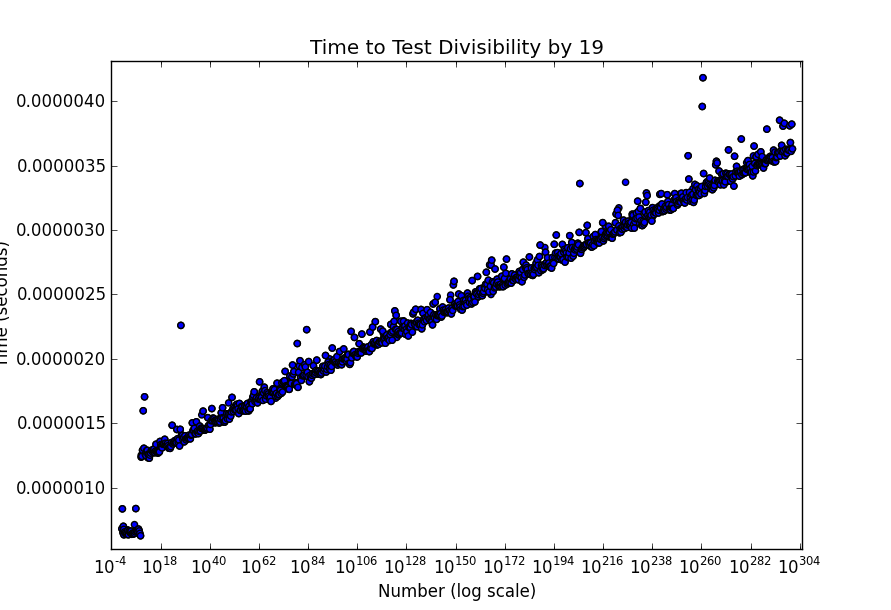

Of course, we can no longer ask how long it takes this program to run because the answer depends on the input. We typically do this over very large scales when possible. Here’s what that dependency looks like for n between 1 and 10^300, on a log scale (There are very roughly 10^80 electrons in the universe, so 10^300 is a ridiculously large number that basically only exists because we can write it down):

(Click to enlarge.)

So, we learned some things.

There’s obviously some variation due to the natural fluctuations in my computer’s speed, and there’s some variation due to the specific implementation that I used. (That big jump around 10^9 is a result of conversion from ints to longs.)

But, more interestingly, there’s one distinct pattern: Stuff goes up linearly. Now, of course, this is a log scale, so calling something linear is a bit silly, but it’s actually traditional (for a good reason that I won’t get into) to talk about this stuff on log scales.
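A measurement along these lines could be sketched as follows. This is not the code behind the graph; the helper name, the repetition count, and the choice of test numbers (all nines) are assumptions. In Python 3 there is a single integer type, so the int-to-long jump may not show up.

```python
import timeit

def time_divisibility_check(digits, reps=10000):
    """Average time, in seconds, to test whether a `digits`-digit number is divisible by 19."""
    n = 10 ** digits - 1    # 99...9, a number with exactly `digits` decimal digits
    total = timeit.timeit(lambda: n % 19 == 0, number=reps)
    return total / reps

for digits in (1, 10, 50, 100, 200, 300):
    print(digits, time_divisibility_check(digits))
```

Plotting the second column against the first (that is, against the number of digits, which is the log scale) is one way to see whether the growth looks like a straight line on your own machine.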

Different problems will have very different-looking graphs. Here’s the graph for the program that counts the primes less than n, where n ranges from 1 to 500 (10^300 would take my computer a while…):

Obviously, this is a different beast. This graph looks exponential, so you can see immediately that this problem gets hard fast. If, for example, I try to count the number of primes less than 500,000 with this program, my computer just freezes. Compare that with the previous program, which ran instantly on inputs that would take me a while just to type. That makes it pretty clear which problem is harder.

But, this isn’t perfectly satisfying. We wanted to know how hard a question is. We wanted a number, and we got this weird graph. If someone asks me how hard it is to count the primes less than a number, is the answer a messy graph? Would anyone be reasonably satisfied by such an answer?

And, wait a minute… What has this new level of abstraction actually bought me? Suppose I create a list of all the primes under 500,000. (There are 41,538 primes less than 500,000, so a computer can easily store a list of them.) Then, I can write this program:

If the user's input is less than 500,000:
    Count the number of primes less than it on the list and output the result.
Otherwise:
    Count the entire list.
    Using the same method as before, count the number of primes between 500,000 and the user's input.
    Output their sum.

This program will be much faster than the original for numbers less than 500,000–Instead of freezing my computer, it’s essentially instant. For numbers greater than 500,000, it gets a huge head start before it has to do any “real work.” Does the existence of such a program show that the problem is easier? Of course not. This is very similar to the “cheating” problem that we tried to solve earlier.
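Here is a minimal Python sketch of that head-start program, under a few stated assumptions: the cutoff is 1,000 rather than 500,000 so that it runs quickly, the "stored" list is built at start-up as a stand-in for a list shipped with the program, and a binary search replaces counting through the list. All names are made up for illustration.

```python
from bisect import bisect_left

CACHE_LIMIT = 1000   # the post uses 500,000; smaller here so the sketch runs quickly

def is_prime_naive(n):
    """Trial division by every integer from 2 to n-1, as in the earlier program."""
    if n < 2:
        return False
    for m in range(2, n):
        if n % m == 0:
            return False
    return True

# Stand-in for the stored list. A real "cheating" program would ship this
# list with the code instead of computing it at start-up.
CACHED_PRIMES = [p for p in range(2, CACHE_LIMIT) if is_prime_naive(p)]

def count_primes_below(n):
    """Count the primes strictly less than n."""
    if n <= CACHE_LIMIT:
        # Everything we need is on the list: find how many entries are below n.
        return bisect_left(CACHED_PRIMES, n)
    # Head start from the list, then "real work" for the rest.
    count = len(CACHED_PRIMES)
    for k in range(CACHE_LIMIT, n):
        if is_prime_naive(k):
            count += 1
    return count

print(count_primes_below(78))
print(count_primes_below(2000))
```

Below the cutoff the answer is a lookup; above it, the slow method takes over again, which is exactly why the head start says nothing about how hard the problem is.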

n Takes a Trip to Infinity

The key solution to these two problems–the messy graph problem and the cheating problem–is to take a limit. The intuition is pretty simple: We’re not interested in a program’s behavior on one specific input because you can cheat. We don’t want to solve this problem by taking a large chunk of inputs because you can still cheat; plus, now you have more information to worry about. So, we instead analyze the program’s behavior as n approaches infinity because, well, you can’t cheat at infinity.

Based on the graph I made above, adding another digit to the number increases the time it took my program to decide if a number was divisible by 19 by about 0.000000012 seconds. Suppose, for the sake of argument, that this pattern continues out well beyond 10^300. Suppose it continues to 10^600 and 10^1000 and to 10^(10^1000) and 10^10^10^10^… with 10^10 such exponents, and even further to numbers that are so large that we can’t even possibly write them in this way. Indeed, suppose it continued to infinity.

Well, then, we could assign a run-time to any number. If a number has n digits, then the program will take about 0.000000012 * n seconds to calculate whether it’s divisible by 19.

Now, we can write cheating programs that are much faster on smaller numbers, but we’re only concerned with the limit as n goes to infinity, so that’s fine. You also might be able to write cheating programs that save some constant amount of run-time: say, a program that runs faster by three hours because it starts out with a list of some useful information that allows it to skip some crucial step. But, as n goes to infinity, there’s no real difference between 0.000000012 * n seconds and 0.000000012 * n seconds minus three hours.
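One way to make "no real difference" precise is to look at the ratio of the two run-times. In the sketch below, k is the per-digit time from above and c is the constant head start; the symbol c is introduced here purely for illustration.

```latex
\[
\lim_{n \to \infty} \frac{k n - c}{k n}
  = \lim_{n \to \infty} \left( 1 - \frac{c}{k n} \right)
  = 1,
\qquad
k = 1.2 \times 10^{-8}\ \text{s per digit}, \quad
c = 3\ \text{hours} = 10{,}800\ \text{s}.
\]
```

With these numbers, the head start only stops dominating once n is well beyond c / k = 9 × 10^11 digits, but in the limit the ratio still goes to 1.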

This might seem a little unfair, but it’s really the best we can do.

Who needs constant factors?

In fact, computer scientists often go one step further: They often drop the 0.000000012 entirely. We instead use a lazy notation: we simply say that the run-time is “O(n)”, which means that we know a program that solves the problem whose run-time, as the number of digits in the input, n, goes to infinity, is at most k * n, where k is some constant. We don’t even bother to write the constant factor. We might also just say that the program “runs in time linear in the number of digits.”
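For reference, the standard textbook definition behind the notation can be written as follows. The symbols T(n), for the run-time on inputs with n digits, and n_0 are introduced here for illustration; k is the constant from the paragraph above.

```latex
\[
T(n) = O(n)
\iff
\text{there exist constants } k > 0 \text{ and } n_0 \text{ such that }
T(n) \le k \cdot n \ \text{ for all } n \ge n_0 .
\]
```

The threshold n_0 is what lets us ignore cheating on small inputs, and the unspecified k is what lets us ignore the speed of any particular computer.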

There are a couple of reasons why we do this:

First of all, the constant k (the 0.000000012) is obviously dependent on the computer. If I get a computer that’s twice as fast, it will halve the constant. We can get around this by choosing some abstract definition of a computer or using one canonical computer (like my laptop, or maybe a special laptop that’s kept in some vacuum chamber somewhere, similar to how they keep the kilogram). But, which abstract definition or computer would we choose? By choosing instead to simply say that the run-time is linear, we ignore all that. Any modern computer (and almost all modern theoretical models of computers) can run an O(whatever) algorithm in O(whatever) time, but it’s certainly not the case that they’ll all have the same constant factors.

Second, computer scientists deal with a terrifyingly wide range of run-times. Some programs, like my nineteen-divisibility checker, run in time linear in the number of digits. Others, like my prime-counting program, are exponential: they might run in, say, O(2^n) time. If one program takes k_1 * n seconds to run, where n is the number of digits in the input, and another takes k_2 * 2^n seconds to run, then no matter how much smaller k_2 is than k_1, there will always be inputs that are sufficiently large that the first program runs incomparably faster than the second. Indeed, for most values of k_2 encountered in practice, it’s often fairly easy to find an input that would take an O(2^n) program longer than the entire age of the universe to solve. Even the difference between O(n) and O(n^2) time is enormous and makes the constant factors seem silly.
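To put rough numbers on this, here is a small sketch. The constants are assumptions chosen for illustration: k_1 is the per-digit time measured earlier, k_2 is a generous one nanosecond, and the age of the universe is taken as roughly 13.8 billion years.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
AGE_OF_UNIVERSE_S = 13.8e9 * SECONDS_PER_YEAR   # roughly 13.8 billion years, in seconds

def linear_time(n, k1=1.2e-8):
    """Run-time in seconds of a k_1 * n program on an n-digit input."""
    return k1 * n

def exponential_time(n, k2=1e-9):
    """Run-time in seconds of a k_2 * 2^n program on an n-digit input."""
    return k2 * 2 ** n

def first_size_exceeding(budget_s, k2=1e-9):
    """Smallest n for which the exponential program needs more than budget_s seconds."""
    n = 1
    while exponential_time(n, k2) <= budget_s:
        n += 1
    return n

n = first_size_exceeding(AGE_OF_UNIVERSE_S)
print(n, linear_time(n), exponential_time(n))
```

Under these assumptions, the exponential program already needs longer than the age of the universe at n = 89 digits, while the linear program handles the same input in about a microsecond. Making k_2 a thousand times smaller only buys about ten more digits.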

Of course, this level of abstraction sometimes goes too far. It’s often very important to lower the constant factor as much as possible, and there are certainly quadratic-time algorithms that are much faster in practice than linear-time algorithms because of lower constant factors.

Who cares?

I personally think that this is interesting by itself: the question of how hard a problem is seems quite natural, and even just this brief introduction to the problem shows that it’s actually quite subtle and deep. But, it’s also of huge practical importance for two reasons:

- Often, we want to solve problems. Knowing if a particular problem is easy or hard to solve is therefore quite advantageous on its own. For example, I don’t suggest wasting your time trying to figure out the fastest way to visit, say, 100 different airports: the vast majority of computer scientists are pretty sure modern computers would take much longer than the age of the universe to solve that problem. That’s a good thing to know before you start.

- Hard problems are actually really useful. In particular, we use them for cryptography. The foundation of much of modern cryptography is one assumption: Some problems are extremely difficult to solve (i.e., they would take more than the age of the universe to solve), but it’s very easy to check the solution. For example, we believe that it’s extremely hard to find a factor of a very large number. We think that you can’t do that much better than trying every single possible factor, and that would take longer than the age of the universe to do for numbers that are hundreds of digits long. But, as I demonstrated above, it’s very easy to check whether one number is a factor of another once you have a factor to try, even for numbers that are thousands of digits long. It might surprise you to learn that (1) we haven’t proven that it’s actually hard to factor large numbers, and (2) essentially all of modern practical cryptography (including the encryption that your bank uses to prevent people from stealing your money) is founded on this assumption. That’ll be the topic of my next post.
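The gap between checking a factor and finding one is easy to see in a small sketch. The numbers below are assumptions chosen for illustration: N is the product of two well-known Mersenne primes, and the function names are made up.

```python
P = 2 ** 61 - 1      # a known Mersenne prime
Q = 2 ** 127 - 1     # another known Mersenne prime
N = P * Q            # a 57-digit number whose only nontrivial factors are P and Q

def is_factor(candidate, n):
    """Checking a proposed factor takes a single remainder operation."""
    return 1 < candidate < n and n % candidate == 0

def find_factor_by_trial(n, max_tries):
    """Try 2, 3, 4, ... in turn, giving up after max_tries candidates."""
    for candidate in range(2, 2 + max_tries):
        if n % candidate == 0:
            return candidate
    return None

print(is_factor(P, N))                    # checking is immediate
print(find_factor_by_trial(N, 100000))    # searching gives up long before reaching P
```

Trying every candidate up to P would take about 2^61 remainder operations here, and real cryptographic numbers are much larger than this toy N.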

Anyway, that’s my rant. I hope you enjoyed it.

If you liked this post, you might enjoy my poker blog as well. And, you might want to follow me on Twitter or subscribe to this blog’s RSS feed. I’ve got a lot of posts in the pipeline–They’re all just about almost done–but I’m incredibly busy at the moment, so the gap between almost done and done is a big one.

There’s a reason we sometimes still program in good ol’ Fortran 77: some calculations are extremely slow in Python. C’s numerical recipes are good, but almost nothing comes close to F77 when it comes to basic calculations, because at the time each microsecond saved was immensely important. It’s sad that amazing libraries like scipy sometimes take 10x longer to do a filtering or something like that, because they are not optimized and just brute force it; there are so many more clever ways of doing it (a la Hacker’s Handbook). They know about it, and if you, the audience, can contribute, they would be thankful. “Unfortunately”, computers are so fast that almost nobody notices, except people at the root of the problem.

Anyway, rant over, but something to consider when calculating computation times, especially if you’re using libraries.

I don’t think scipy brute forces very much that it doesn’t need to. It’s pretty sophisticated WRT using optimal asymptotic run-time algorithms. Python is very slow in general, of course–There’s a big sacrifice because it’s interpreted as opposed to compiled, and many of its data structures are inherently slow. But, the slowdowns are of course constant factor increases, not changes in the underlying asymptotic run-time of the function.

Anyway, I used Python because (1) It’s awesome and my default for everything and (2) The timeit module is amazing. If I wanted to write a post about the fastest possible run-time for things, I would’ve used C (because I’m not badass enough to know F77), but for relative run-time, Python with timeit is pretty sick.