Hi, nerd blog! (This is a post that I wrote a long time ago and then never published. I figured it would be nice to publish it on March 14th.)

Today, we’re interested in the Gaussian integral

$$\int_{-\infty}^{\infty} e^{-t^2}\,\mathrm{d}t.$$

By a change of variables, we immediately see that ![]() is proportional to

is proportional to ![]() . But, what is the constant of proportionality? It’s actually nicer to ask a slightly different question: what is the unique value of

. But, what is the constant of proportionality? It’s actually nicer to ask a slightly different question: what is the unique value of ![]() such that

such that ![]() . A quick numerical computation shows that

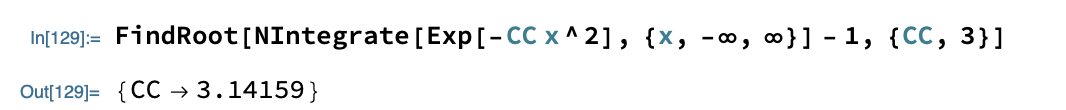

. A quick numerical computation shows that ![]() . E.g., here’s some Mathematica code to find this value:

. E.g., here’s some Mathematica code to find this value: .

.
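The Mathematica code above is only available as an image. Here is a minimal Python sketch of one way to do the same kind of computation; it is not a transcription of that code. It assumes that Simpson’s rule on the truncated interval $[-10, 10]$ is accurate enough. It then finds $c$ by bisection, which works because the integral is strictly decreasing in $c$. The helper names `gaussian_integral` and `find_c` are hypothetical, and the code deliberately never touches `math.pi`.

```python
import math


def gaussian_integral(c, half_width=10.0, steps=4000):
    """Approximate the integral of exp(-c * t^2) over the real line.

    Uses composite Simpson's rule on [-half_width, half_width]; for c >= 1
    the tails beyond |t| = 10 are below exp(-100) and can be ignored.
    `steps` must be even.
    """
    h = 2 * half_width / steps
    # f(-L) + f(L): both endpoints have the same value by symmetry.
    total = 2 * math.exp(-c * half_width ** 2)
    for i in range(1, steps):
        t = -half_width + i * h
        total += (4 if i % 2 else 2) * math.exp(-c * t * t)
    return total * h / 3


def find_c(lo=1.0, hi=10.0, iterations=60):
    """Bisect for the c > 0 with gaussian_integral(c) == 1.

    The integral is strictly decreasing in c, is about 1.77 at c = 1 and
    about 0.56 at c = 10, so the root is bracketed by [lo, hi].
    """
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if gaussian_integral(mid) > 1:
            lo = mid  # integral still too big, so c must be larger
        else:
            hi = mid
    return (lo + hi) / 2


c = find_c()
print(f"{c:.5f}")  # 3.14159
```

For Gaussians, Simpson’s rule is essentially a combination of trapezoidal rules, which converge extremely fast on smooth, rapidly decaying integrands. That is why a few thousand steps already pin $c$ down far beyond five digits.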

This constant $c$ is so important for this blog post that it is worth giving it a name. So, I looked through the Greek alphabet for a nice letter that doesn’t get used much and chose the obscure lowercase letter $\pi$, spelled pi in English and pronounced like “pie”. In other words, by definition

$$\int_{-\infty}^{\infty} e^{-\pi t^2}\,\mathrm{d}t = 1.$$

(If this implicit definition bothers you, we can equivalently just define $\pi := \left(\int_{-\infty}^{\infty} e^{-t^2}\,\mathrm{d}t\right)^2$. But, I find the implicit definition to be more elegant.)
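As a quick sanity check that the implicit and explicit definitions agree, here is a sketch that computes $\left(\int e^{-t^2}\,\mathrm{d}t\right)^2$ and then plugs the result back into $\int e^{-\pi t^2}\,\mathrm{d}t$. It assumes a plain trapezoidal rule on $[-10, 10]$, and the helper `integrate` is hypothetical. The trapezoidal rule is extremely accurate for Gaussians on a wide enough interval, so a modest number of steps suffices.

```python
import math


def integrate(f, a, b, steps=2000):
    """Composite trapezoidal rule for f on [a, b]."""
    h = (b - a) / steps
    total = (f(a) + f(b)) / 2
    for i in range(1, steps):
        total += f(a + i * h)
    return total * h


# Explicit definition: pi := (integral of exp(-t^2) over R)^2, with R
# truncated to [-10, 10] (the neglected tails are below exp(-100)).
explicit_pi = integrate(lambda t: math.exp(-t * t), -10.0, 10.0) ** 2

# Implicit definition: with this value, exp(-pi t^2) should integrate to 1.
implicit_check = integrate(lambda t: math.exp(-explicit_pi * t * t), -10.0, 10.0)

print(explicit_pi, math.pi)
print(implicit_check)
```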

So, we have this brand new mysterious constant $\pi$. What should we do with it? It is of course natural to try to find different expressions for it (though our integral expression can already be used to compute it to quite high precision). A first idea is to apply the change of variables $s := \sqrt{\pi}\, t$ to obtain

$$\int_{-\infty}^{\infty} e^{-s^2}\,\mathrm{d}s = \sqrt{\pi}\int_{-\infty}^{\infty} e^{-\pi t^2}\,\mathrm{d}t = \sqrt{\pi}.$$

This mysterious identity will play a key role later. We could, of course, find other identities involving this new constant $\pi$. But, I thought instead I’d jump ahead to a rather obscure fact about the relationship between $\pi$ and a circle.

Our constant’s relationship with circles

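In my opinion, the Gaussian distribution is far more interesting in dimensions larger than one. In particular, consider the distribution on $\mathbb{R}^n$ given by the probability density function

$$\rho(x) := e^{-\pi\|x\|^2} = e^{-\pi x_1^2}\cdot e^{-\pi x_2^2}\cdots e^{-\pi x_n^2}.$$

This is indeed a probability density, since by the product form

$$\int_{\mathbb{R}^n}\rho(x)\,\mathrm{d}x = \prod_{i=1}^{n}\int_{-\infty}^{\infty} e^{-\pi x_i^2}\,\mathrm{d}x_i = 1.$$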

In fact, for $n \geq 2$ and up to scaling, this distribution is the unique continuous radial product distribution. That is, it is the unique distribution such that $\rho(x)$ can be written both as a function only of the norm of $x$, $\rho(x) = f(\|x\|)$ for some continuous function $f$, and as a product of functions of its coordinates, $\rho(x) = g_1(x_1)\,g_2(x_2)\cdots g_n(x_n)$. This makes the Gaussian a uniquely powerful tool for reducing complicated multi-dimensional problems to one-dimensional problems.
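To make the “radial product” property concrete, here is a small Python sketch. The grid spacing $h = 0.05$ and the truncation to $[-6, 6]^2$ are arbitrary assumptions. It checks three things: $\rho$ depends only on $\|x\|$, it factors over coordinates, and a two-dimensional Riemann sum for $\int_{\mathbb{R}^2}\rho$ is just the square of the one-dimensional one.

```python
import math


def rho(x):
    """The density exp(-pi * ||x||^2) on R^n, with x given as a tuple."""
    return math.exp(-math.pi * sum(xi * xi for xi in x))


# Radial: (1, 0) and (0.6, 0.8) both have norm 1, so they get the same density.
print(rho((1.0, 0.0)), rho((0.6, 0.8)))

# Product: rho(x) equals the product of one-dimensional Gaussians exp(-pi x_i^2).
x = (0.3, -1.2, 0.5)
print(rho(x), math.prod(math.exp(-math.pi * xi * xi) for xi in x))

# From 2D to 1D: a Riemann sum for rho over a grid on [-6, 6]^2 is the
# square of the matching 1D Riemann sum, and both are extremely close to 1.
h = 0.05
grid = [-6.0 + i * h for i in range(241)]
one_d = h * sum(math.exp(-math.pi * t * t) for t in grid)
two_d = h * h * sum(rho((s, t)) for s in grid for t in grid)
print(one_d ** 2, two_d)
```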

For example, suppose that for some strange reason we wish to know the circumference of a circle with radius one. (If we were less civilized mathematicians, we might instead set the diameter to be equal to $1$, so that the radius would be $1/2$.) We can try to write this as some kind of path integral or something (and suffer quite a bit in the process), or we can use the following beautiful trick. Let $\sigma_1^{(1)}$ denote the circumference of the unit circle, so that a circle of radius $r$ has circumference $\sigma_1^{(1)} r$. Integrating $e^{-\pi\|x\|^2}$ over circles centered at the origin, we can write

$$1 = \int_{\mathbb{R}^2} e^{-\pi\|x\|^2}\,\mathrm{d}x = \int_0^\infty \sigma_1^{(1)}\, r\, e^{-\pi r^2}\,\mathrm{d}r = \frac{\sigma_1^{(1)}}{2\pi},$$

where

$$\int_0^\infty r\, e^{-\pi r^2}\,\mathrm{d}r = \left[-\frac{e^{-\pi r^2}}{2\pi}\right]_0^\infty = \frac{1}{2\pi}.$$

That is, $\sigma_1^{(1)} = 2\pi$.

So, surprisingly, our mysterious constant $\pi$ is actually intimately related to the circumference of a circle. (If we were less civilized mathematicians, we might even have simply defined $\pi$ to be the circumference of a circle with radius $1/2$.)
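The following sketch carries out both approaches numerically. The first is the Gaussian trick, which gives the circumference $C$ of the unit circle as $1 / \int_0^\infty r e^{-\pi r^2}\,\mathrm{d}r$. The second is a direct arc-length computation of the kind the trick lets us avoid. Here `simpson` is a hypothetical helper implementing Simpson’s rule. For the arc length I assume the arc of $y = \sqrt{1 - x^2}$ over $0 \le x \le 1/\sqrt{2}$, which is one eighth of the unit circle and keeps the integrand bounded.

```python
import math


def simpson(f, a, b, steps=1000):
    """Composite Simpson's rule for f on [a, b]; `steps` must be even."""
    h = (b - a) / steps
    total = f(a) + f(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


# Gaussian trick: 1 = C * integral_0^inf r exp(-pi r^2) dr, so C = 1 / integral.
# The radial integral is truncated at r = 10, where the integrand is ~exp(-314).
radial = simpson(lambda r: r * math.exp(-math.pi * r * r), 0.0, 10.0)
circumference_gaussian = 1 / radial

# Direct arc length for comparison: the graph of y = sqrt(1 - x^2) over
# 0 <= x <= 1/sqrt(2) is one eighth of the unit circle, and its arc-length
# element is sqrt(1 + y'(x)^2) dx = dx / sqrt(1 - x^2).
arc = simpson(lambda x: 1 / math.sqrt(1 - x * x), 0.0, 1 / math.sqrt(2))
circumference_arc = 8 * arc

print(circumference_gaussian, circumference_arc, 2 * math.pi)
```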

What’s so special about two dimensions? Surface area of n-spheres.

But, why stop in dimension $n = 2$? This same one neat trick is just as useful in higher dimensions. E.g., what is the surface area $\sigma_1^{(n-1)}$ of a unit sphere in $n$ dimensions? (Conventionally, we write the $(n-1)$-sphere as $S^{n-1}$ because it is an $(n-1)$-dimensional object that happens to be embedded in $n$-dimensional space. This is why I write $\sigma_1^{(n-1)}$ for its surface area.) Well, we have

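$$1 = \int_{\mathbb{R}^n} e^{-\pi\|x\|^2}\,\mathrm{d}x = \sigma_1^{(n-1)}\int_0^\infty r^{n-1} e^{-\pi r^2}\,\mathrm{d}r = \sigma_1^{(n-1)}\cdot\frac{\Gamma(n/2)}{2\pi^{n/2}},$$

where $\Gamma(s) := \int_0^\infty t^{s-1} e^{-t}\,\mathrm{d}t$ is the Gamma function, and the last step uses the substitution $t := \pi r^2$. Therefore,

$$\sigma_1^{(n-1)} = \frac{2\pi^{n/2}}{\Gamma(n/2)}.$$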
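Here is a sketch that checks $\sigma_1^{(n-1)}\int_0^\infty r^{n-1} e^{-\pi r^2}\,\mathrm{d}r = 1$ numerically for small $n$, using Python’s standard `math.gamma` for the closed form $2\pi^{n/2}/\Gamma(n/2)$. The helper names are hypothetical. Truncating the radial integral at $r = 10$ is an assumption, but a harmless one for these small $n$.

```python
import math


def simpson(f, a, b, steps=2000):
    """Composite Simpson's rule for f on [a, b]; `steps` must be even."""
    h = (b - a) / steps
    total = f(a) + f(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def sphere_surface_area(n):
    """sigma_1^{(n-1)} = 2 pi^(n/2) / Gamma(n/2), the area of the unit sphere in R^n."""
    return 2 * math.pi ** (n / 2) / math.gamma(n / 2)


def radial_integral(n):
    """Numerically approximate integral_0^inf r^(n-1) exp(-pi r^2) dr, truncated at r = 10."""
    return simpson(lambda r: r ** (n - 1) * math.exp(-math.pi * r * r), 0.0, 10.0)


# The Gaussian trick says sigma_1^{(n-1)} * radial_integral(n) should equal 1.
for n in range(1, 8):
    print(n, sphere_surface_area(n), sphere_surface_area(n) * radial_integral(n))
```

For $n = 1$ the formula gives $2$, which matches the “$0$-sphere” $S^0 = \{-1, 1\}$ consisting of two points.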

If the Gamma function intimidates you, that’s fine. (It certainly intimidates me.) We can go a bit further by remembering that for integers $k \geq 1$, $\Gamma(k) = (k-1)!$, while $\Gamma(k + 1/2) = \frac{(2k)!}{4^k\, k!}\sqrt{\pi}$. (Both of these identities follow from the relation $\Gamma(x+1) = x\,\Gamma(x)$, which follows from integration by parts, together with the values $\Gamma(1) = 1$ and $\Gamma(1/2) = \sqrt{\pi}$. The latter is exactly our mysterious identity from before, after the substitution $t = s^2$.)
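These Gamma-function identities are easy to spot-check with Python’s standard `math.gamma` and `math.factorial`. The sketch below only tests finitely many cases, so it is a sanity check rather than a proof.

```python
import math

# Gamma(k) = (k - 1)! and Gamma(k + 1/2) = (2k)! sqrt(pi) / (4^k k!) for k = 1, ..., 9.
for k in range(1, 10):
    assert math.isclose(math.gamma(k), math.factorial(k - 1))
    half_integer = math.factorial(2 * k) * math.sqrt(math.pi) / (4 ** k * math.factorial(k))
    assert math.isclose(math.gamma(k + 0.5), half_integer)

# The ingredients: Gamma(x + 1) = x * Gamma(x), Gamma(1) = 1, Gamma(1/2) = sqrt(pi).
for x in (0.5, 1.0, 2.5, 3.7):
    assert math.isclose(math.gamma(x + 1), x * math.gamma(x))
assert math.isclose(math.gamma(1.0), 1.0)
assert math.isclose(math.gamma(0.5), math.sqrt(math.pi))

print("all identities check out")
```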

Then, we see that the surface area of the unit sphere in $n$ dimensions is

$$\sigma_1^{(n-1)} = \begin{cases}\pi^{n/2} \cdot \frac{2}{(n/2-1)!} & n\text{ even}\\ \pi^{(n-1)/2} \cdot \frac{2^n \cdot ((n-1)/2)!}{(n-1)!} & n\text{ odd}.\end{cases}$$

We can also plug in low values of $n$ to see what we get. E.g., I have heard that some people are interested in the cases $n = 2$ and $n = 3$. Plugging in, one sees that the circumference of a circle with radius one is $2\pi$ (which, ok, we already saw before), and that the surface area of a sphere with radius one is $4\pi$. But, we can easily go farther: the surface area in four dimensions is $2\pi^2$, and in five dimensions, it is $8\pi^2/3$.
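Here is a sketch of the piecewise formula using only factorials, checked against the Gamma-function form $2\pi^{n/2}/\Gamma(n/2)$ and against the low-dimensional values above. The function names are hypothetical.

```python
import math


def sigma_gamma(n):
    """Surface area of the unit sphere in R^n via 2 pi^(n/2) / Gamma(n/2)."""
    return 2 * math.pi ** (n / 2) / math.gamma(n / 2)


def sigma_cases(n):
    """The same quantity via the even/odd piecewise formula, using only factorials."""
    if n % 2 == 0:
        return math.pi ** (n // 2) * 2 / math.factorial(n // 2 - 1)
    k = (n - 1) // 2
    return math.pi ** k * 2 ** n * math.factorial(k) / math.factorial(n - 1)


# The two formulas agree for every dimension we try.
assert all(math.isclose(sigma_cases(n), sigma_gamma(n)) for n in range(1, 30))

# The low-dimensional values quoted above.
expected = {2: 2 * math.pi, 3: 4 * math.pi, 4: 2 * math.pi ** 2, 5: 8 * math.pi ** 2 / 3}
for n, value in expected.items():
    print(n, sigma_cases(n), value)
```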

And, we can of course find the volume of the unit $n$-ball by computing a simple integral

$$V_n = \int_0^1 \sigma_1^{(n-1)}\, r^{n-1}\,\mathrm{d}r = \frac{\sigma_1^{(n-1)}}{n} = \frac{\pi^{n/2}}{\Gamma(n/2 + 1)}.$$
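Finally, here is a sketch comparing the volume of the unit $n$-ball, $\sigma_1^{(n-1)}/n = \pi^{n/2}/\Gamma(n/2 + 1)$, with a Monte Carlo estimate. The estimate samples points uniformly from the cube $[-1, 1]^n$ and counts the fraction that land in the ball. The sample count and seed are arbitrary assumptions, and with 50,000 samples the estimate is only accurate to roughly a percent, so treat it as a rough check.

```python
import math
import random


def ball_volume(n):
    """Closed form: volume of the unit n-ball, pi^(n/2) / Gamma(n/2 + 1)."""
    return math.pi ** (n / 2) / math.gamma(n / 2 + 1)


def ball_volume_monte_carlo(n, samples=50_000, seed=0):
    """Estimate the same volume as 2^n times the fraction of uniform points
    in the cube [-1, 1]^n that land inside the unit ball."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        if sum(rng.uniform(-1.0, 1.0) ** 2 for _ in range(n)) <= 1.0:
            hits += 1
    return 2 ** n * hits / samples


for n in range(1, 6):
    # Also check V_n = sigma_1^{(n-1)} / n with sigma_1^{(n-1)} = 2 pi^(n/2) / Gamma(n/2).
    sigma = 2 * math.pi ** (n / 2) / math.gamma(n / 2)
    assert math.isclose(ball_volume(n), sigma / n)
    print(n, ball_volume(n), ball_volume_monte_carlo(n))
```

The Monte Carlo error shrinks only like $1/\sqrt{\text{samples}}$. Also, the fraction of the cube occupied by the ball shrinks quickly as $n$ grows. Together these make this kind of check less useful in high dimensions.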

In short, I think this mysterious constant $\pi$ is rather nice. Perhaps it will find other applications.